January 16, 2026

Context, Cognition, and the Poisoned Mushroom Problem: Why AI Readiness Depends on Data Understanding

Marketing


Artificial intelligence is often called intelligent because it produces fluent language, confident predictions, and increasingly autonomous actions. Yet what modern AI systems do remains different from human understanding, and that gap becomes business risk when organizations deploy AI without the context needed to interpret what it is processing.

In his 1980 paper *Minds, Brains, and Programs*, philosopher John Searle introduced the Chinese Room thought experiment. Imagine a person in a room who does not understand Chinese. They receive Chinese symbols through a slot, consult a rulebook written in English, and return the appropriate symbols as output. To someone outside the room, the responses appear fluent. Inside, there is no grasp of meaning, only symbol manipulation.

Searle’s point is that syntactic processing is not the same as semantic understanding. A system can produce correct outputs without knowing what those outputs mean, which matters whenever we treat output quality as proof of comprehension.

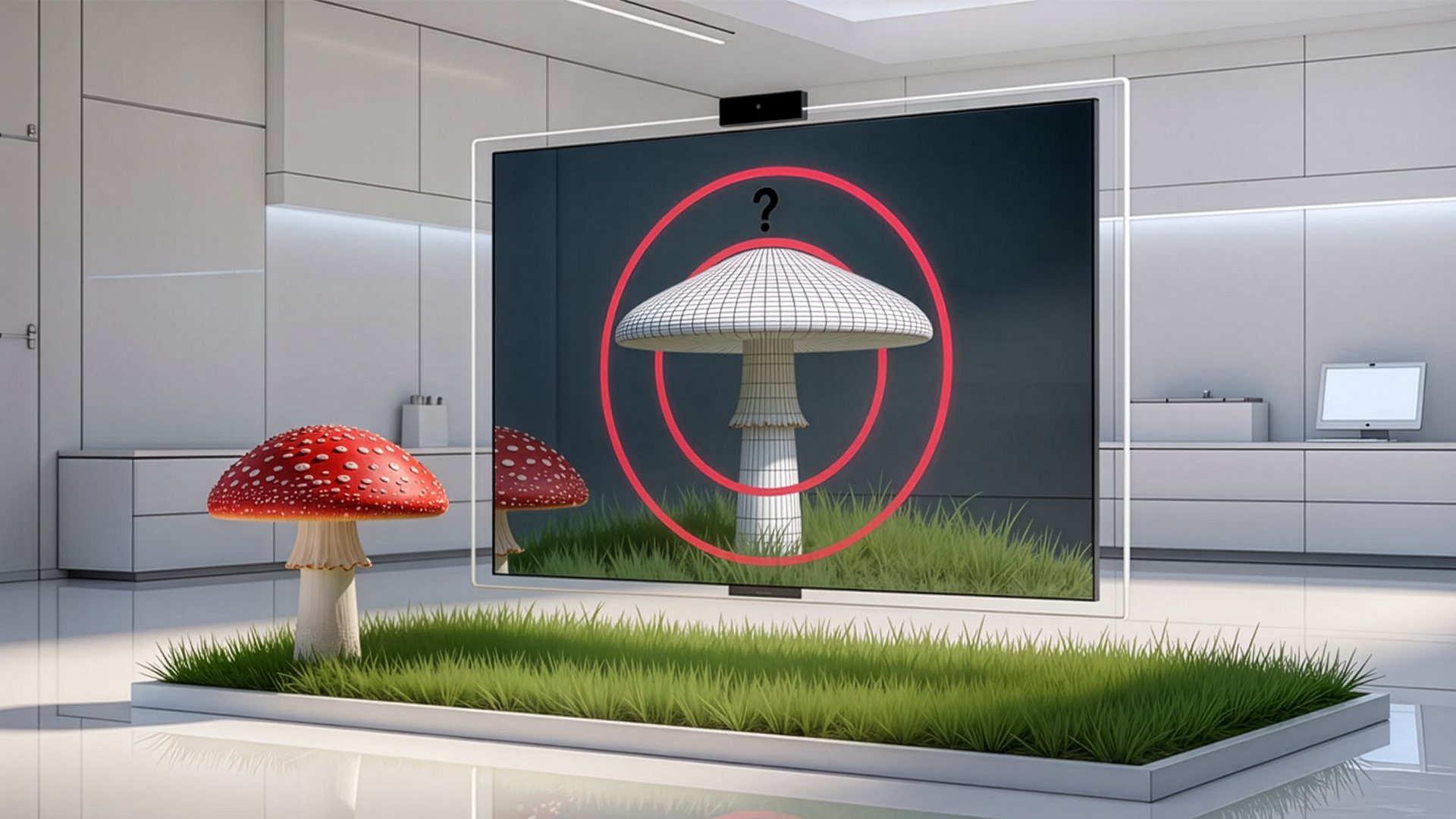

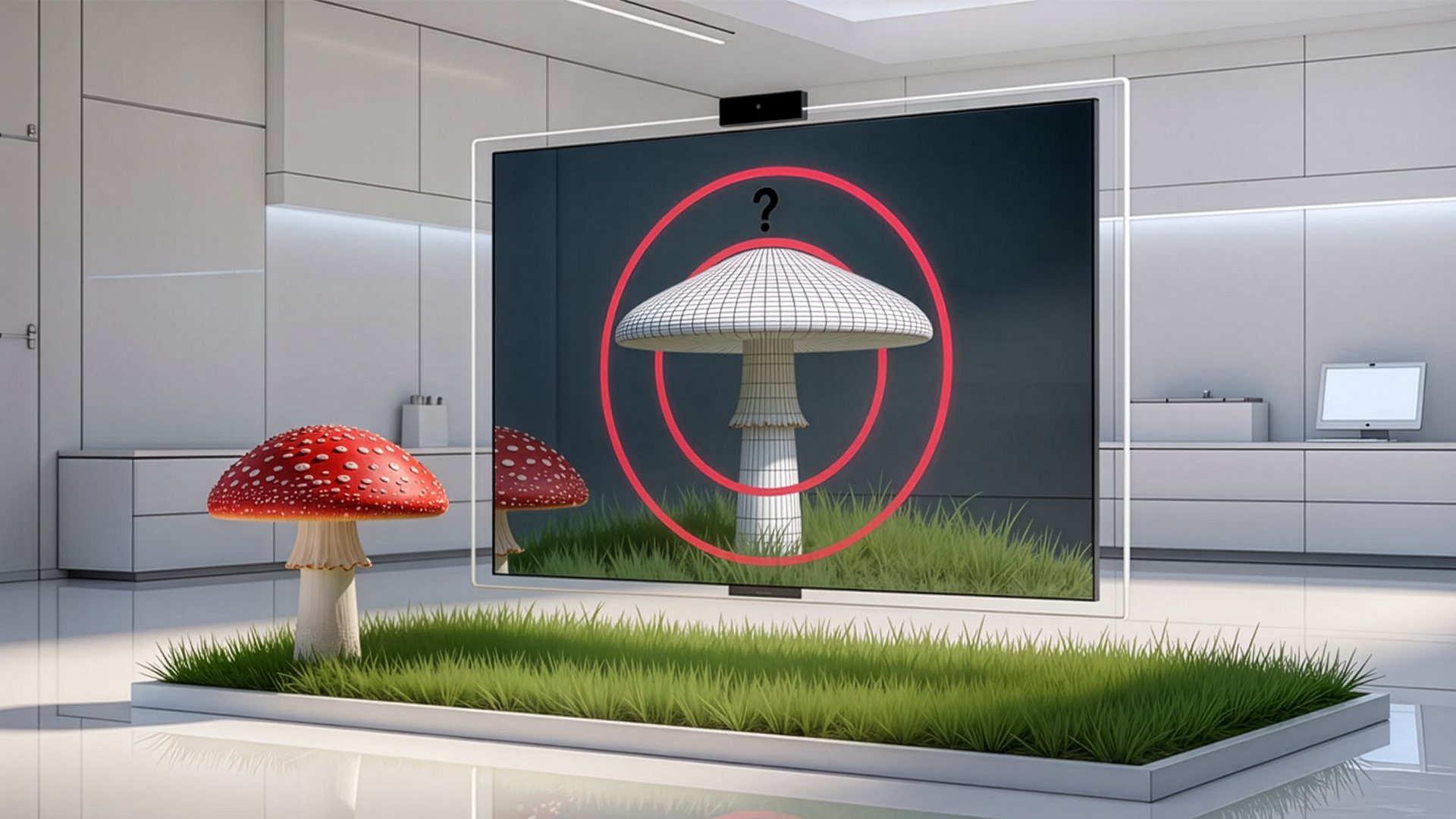

The difference becomes clearer with a mundane but deadly example. Imagine picking a mushroom and asking an AI assistant whether it is edible. The assistant compares patterns and replies, "Yes, it is edible." You eat it, fall ill, and die. The assistant answered a narrow version of the question because it lacked the context that matters most: edible does not necessarily mean safe for a human body. The failure is one of interpretation, not fluency.

A similar failure of context plays out every day in the financial services sector. Consider a multinational bank that deploys an AI-enabled data security tool. It scans the environment, flags a dataset containing names, account numbers, and transaction IDs, and labels it as sensitive financial data. From a pattern recognition standpoint, nothing looks wrong.

However, meaning sits in the surrounding details. That same dataset may feed a fraud monitoring workflow that sends updates to a third-party analytics provider through a long-lived service account. It may include European customer records, be copied into a reporting environment, and be retained well beyond its operational purpose. When the vendor later suffers a breach, the AI can list affected tables and fields, but it cannot answer the questions that matter in the first hours: whose data was exposed, which rules apply, whether access was justified, and how far the data propagated.

This is why many teams feel over-instrumented and underprepared. They collect large volumes of alerts and labels but still struggle to explain impact. What data was exposed? Who owns it? Which obligations apply? Which downstream systems received it? These are not classification problems. They are context problems.

Breach readiness exposes this weakness because speed without meaning is not readiness. An alert only becomes actionable when it is grounded in ownership, purpose, access paths, and real usage.

This is where Searle’s insight meets modern security. Intelligence without understanding is brittle. Automation without context is dangerous. AI systems that cannot ground their outputs in operational meaning risk giving organizations confidence without comprehension.

True AI readiness is less about picking a model and more about building the data context that lets AI operate safely. Context turns raw data into evidence. Before asking AI to reason, organizations need a defensible map of what data exists, where it lives, how it flows, who can access it, and why it’s retained.
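To make the idea of a context map concrete, here is a minimal Python sketch built on a small, hand-written inventory that mirrors the bank example. The `DatasetContext` fields, the `FLOWS` lineage edges, and the `downstream` and `breach_impact` helpers are hypothetical illustrations, not 1touch.io's data model or API. The point is that the breach questions (how far the data spread, who owns it, which jurisdictions apply, who had access) are answered by walking lineage and joining ownership and access context. A sensitivity label alone cannot answer them.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class DatasetContext:
    """Context for one dataset. All field names are illustrative assumptions."""
    name: str
    system: str                 # where the data lives
    owner: str                  # who is accountable for it
    purpose: str                # why it is retained
    jurisdictions: set = field(default_factory=set)  # e.g. {"EU"} for European customer records
    accessors: set = field(default_factory=set)      # identities that can access it


# Hypothetical inventory: one classified dataset plus the copies a scanner
# alone would not connect back to it.
DATASETS = {
    "fraud_txns": DatasetContext(
        "fraud_txns", "core_banking", "fraud-ops", "fraud monitoring",
        {"EU", "US"}, {"fraud-analyst-role"}),
    "vendor_feed": DatasetContext(
        "vendor_feed", "vendor_analytics", "fraud-ops", "third-party analytics",
        {"EU", "US"}, {"svc-vendor-sync"}),
    "reporting_copy": DatasetContext(
        "reporting_copy", "reporting_env", "finance-bi", "quarterly reporting",
        {"EU"}, {"bi-readers"}),
}

# Hypothetical lineage edges: dataset -> datasets it is copied or fed into.
FLOWS = {
    "fraud_txns": ["vendor_feed", "reporting_copy"],
    "vendor_feed": [],
    "reporting_copy": [],
}


def downstream(start, flows):
    """Breadth-first walk over lineage edges: how far did the data propagate?"""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in flows.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return sorted(seen)


def breach_impact(breached_system, datasets):
    """For datasets in a breached system: owner, applicable jurisdictions, and who had access."""
    return [
        {
            "dataset": d.name,
            "owner": d.owner,
            "jurisdictions": sorted(d.jurisdictions),
            "accessors": sorted(d.accessors),
        }
        for d in datasets.values()
        if d.system == breached_system
    ]


if __name__ == "__main__":
    print(downstream("fraud_txns", FLOWS))
    print(breach_impact("vendor_analytics", DATASETS))
```

In this toy inventory, a breach at the hypothetical `vendor_analytics` system resolves to a named owner, EU and US obligations, and a single service account, while `downstream` shows the same records also reached a reporting copy. A real environment would populate these structures from discovery, identity, and lineage sources rather than by hand.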

This is the gap 1touch.io is designed to address. Instead of treating data as static files to scan and label in isolation, 1touch focuses on contextual intelligence. It scans data in place and builds a living map of sensitive data across hybrid, multicloud, and mainframe environments, tying discovery to identity, access, and workflow context. Sensitive data is not just tagged. It is situated within the systems, processes, and third-party relationships that define its real risk.

With contextual grounding, AI can be applied more safely. Rather than asking a model to guess what data means, teams can provide the context that makes meaning explicit and auditable. Breach readiness improves because incident response teams can determine scope and exposure faster without assembling meaning from disconnected sources.

The mushroom problem disappears when you know the difference between edible and safe. The next phase of AI maturity will not be driven by larger models alone. It will be driven by better grounding. Without it, AI will continue to speak fluently while misunderstanding the world it acts upon. If we scale the Chinese Room across an entire ecosystem without contextual safeguards, eventually someone will eat the mushroom.
