January 22, 2026

Why AI-Ready Data Starts With Context at the Entity Level

Mahesh Iyer

As organizations push to adopt AI, the same issue keeps resurfacing in different forms. The technology works, the models perform as expected, yet the results feel thin, brittle, or difficult to trust.

The problem is not access to data. Most organizations already have more data than they know how to manage. The problem is understanding. AI can only reason as well as the context it is given, and too often that context disappears long before a model ever touches the data.

This is what separates AI-ready data from data that is merely AI-accessible. Readiness is not about volume or velocity. It is about whether data still reflects the real-world entities and relationships it came from.

Enterprises tend to think of data as rows, tables, and pipelines. Customer records live in one system, transactions in another, support interactions somewhere else. As data moves, it gets flattened. Relationships are lost. Meaning fades.

From a technical perspective, nothing looks wrong. The fields are populated. The pipelines run. The model trains.

But the system no longer understands that two records refer to the same person. It cannot tell that a purchase is tied to a specific campaign, channel, or moment in time. It treats a risk signal today the same way it would have five years ago, even though the surrounding behavior has changed completely.

This is where AI starts to fail quietly. Not because the algorithm is weak, but because the data no longer carries the context required to interpret it. The output looks confident, but it lacks depth.

Entity-level context means organizing data around the things that actually exist in the business. Customers. Products. Suppliers. Employees. Accounts. It means preserving not just attributes, but relationships, history, and change over time.

When data is grounded this way, the questions change. Instead of asking what a row says, you can ask what is known about a specific customer right now, how they have behaved in the past, and how their relationships have evolved.
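To make that shift concrete, here is a minimal Python sketch. It assumes hypothetical rows from three systems that have already been matched to a shared `customer_id`; the field names, values, and the `build_entity_view` helper are illustrative and do not come from any specific product.

```python
from collections import defaultdict
from datetime import date

# Hypothetical rows from three separate systems; all names and values are illustrative.
crm_rows = [{"customer_id": "c1", "name": "Ana Ruiz", "segment": "retail"}]
transactions = [
    {"customer_id": "c1", "on": date(2025, 11, 3), "amount": 120.0},
    {"customer_id": "c1", "on": date(2026, 1, 9), "amount": 40.0},
]
support_tickets = [{"customer_id": "c1", "on": date(2025, 12, 1), "topic": "billing"}]


def build_entity_view(crm, txns, tickets):
    """Group flat rows into one record per customer, keeping a dated history."""
    entities = defaultdict(lambda: {"attributes": {}, "history": []})
    for row in crm:
        entities[row["customer_id"]]["attributes"] = {
            k: v for k, v in row.items() if k != "customer_id"
        }
    for row in txns:
        entities[row["customer_id"]]["history"].append((row["on"], "purchase", row["amount"]))
    for row in tickets:
        entities[row["customer_id"]]["history"].append((row["on"], "ticket", row["topic"]))
    # Order each customer's events by date so behavior can be read as a timeline.
    for entity in entities.values():
        entity["history"].sort(key=lambda event: event[0])
    return dict(entities)


view = build_entity_view(crm_rows, transactions, support_tickets)
```

Looking up `view["c1"]` now returns the customer's attributes together with an ordered timeline of purchases and support contacts, rather than three unrelated rows.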

That shift matters because many of the most valuable AI use cases depend on reasoning, not just prediction. Personalization, fraud detection, risk assessment, and real-time decisions all require an understanding of how entities relate to one another and how those relationships shift.

Without that grounding, AI is left matching patterns across fragments.

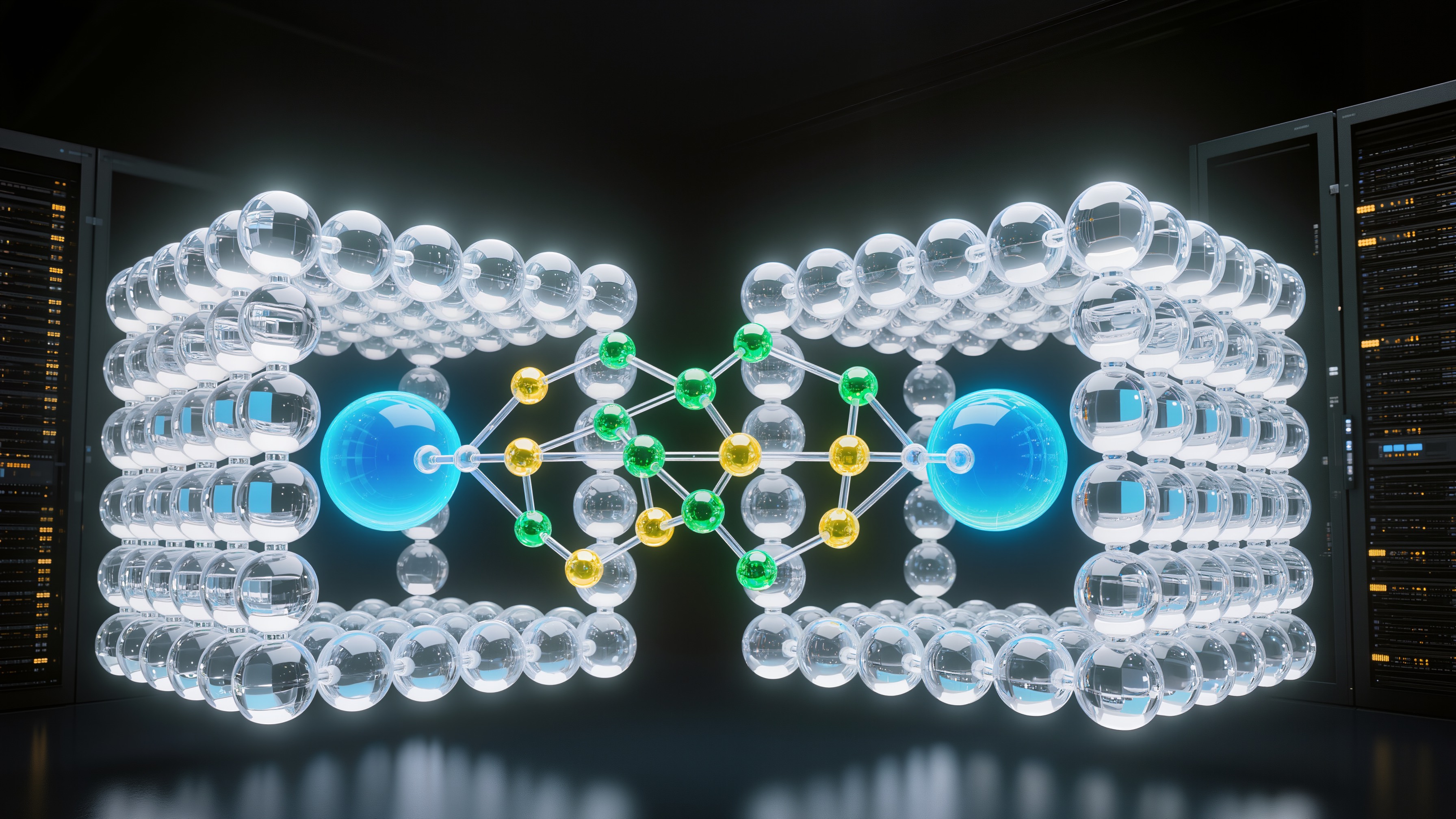

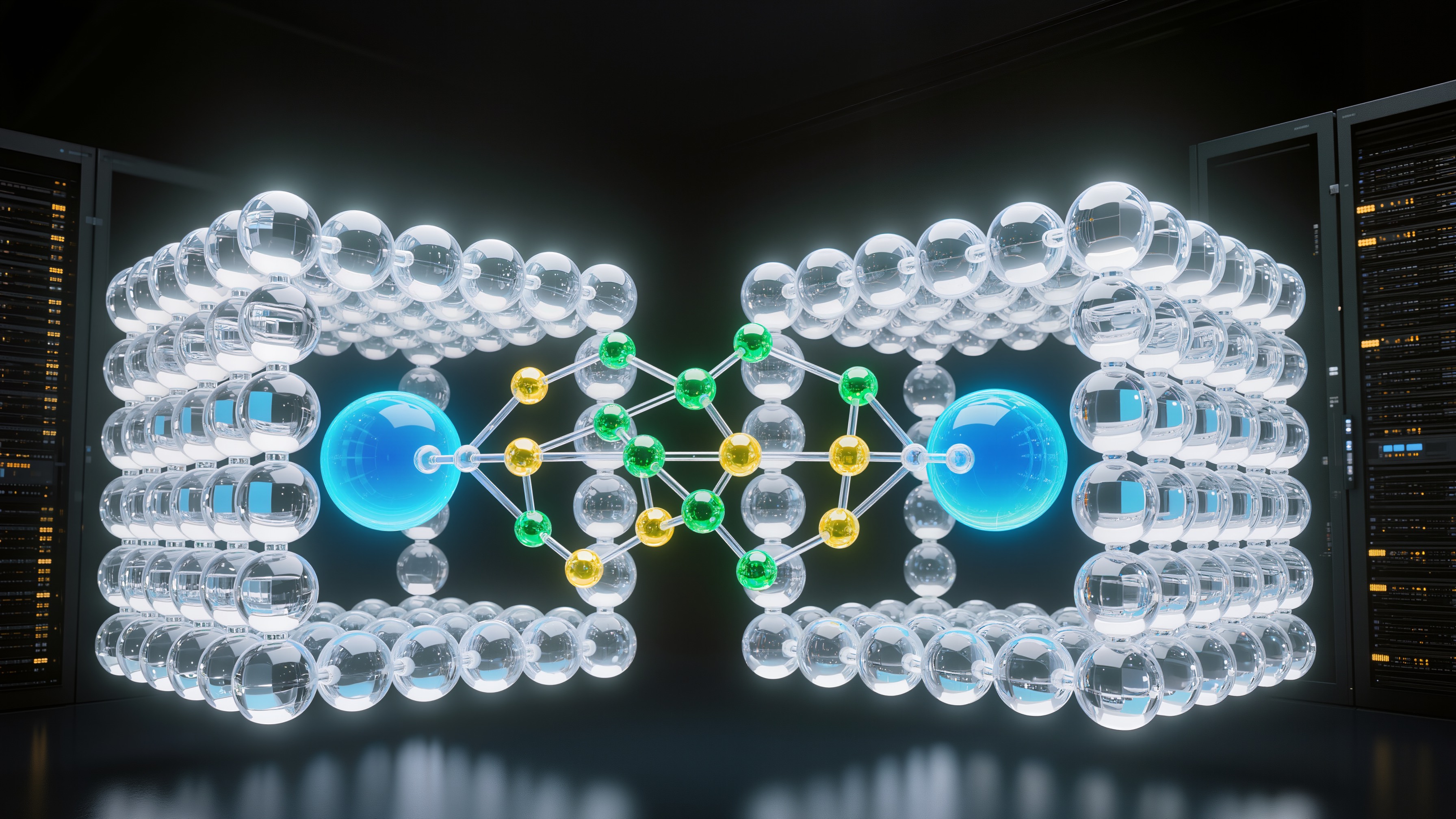

This is where semantic knowledge graphs become practical, not theoretical.

Rather than forcing data into flat structures, a knowledge graph models it as a network of entities connected by relationships that carry meaning. Time matters. Behavior matters. Context matters. Relationships can strengthen, weaken, or disappear altogether as reality changes.
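One way to picture relationships that change over time is to give each edge a validity window. The sketch below is an assumption-laden illustration, not a description of any particular graph product: the `Relationship` class, the predicates, and the entity identifiers are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Relationship:
    subject: str
    predicate: str
    obj: str
    valid_from: date
    valid_to: Optional[date] = None  # None means the relationship still holds

    def holds_on(self, day: date) -> bool:
        # The window is half-open: valid_from is included, valid_to is excluded.
        return self.valid_from <= day and (self.valid_to is None or day < self.valid_to)


# Hypothetical edges; entity names and predicates are illustrative only.
edges = [
    Relationship("customer:ana", "holds", "account:123", date(2020, 5, 1)),
    Relationship("customer:ana", "uses", "device:phone-a", date(2021, 1, 1), date(2024, 6, 1)),
    Relationship("customer:ana", "uses", "device:phone-b", date(2024, 6, 1)),
]


def neighbors(entity: str, day: date) -> list[tuple[str, str]]:
    """Return (predicate, object) pairs that hold for `entity` on `day`."""
    return [(e.predicate, e.obj) for e in edges if e.subject == entity and e.holds_on(day)]
```

Asking for the neighbors of `customer:ana` in 2023 and again in 2025 returns different devices, which is the point: the graph answers "what was true then" as well as "what is true now".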

When structured and unstructured data are unified this way, AI gains something it usually lacks: a coherent view of the world it is acting on. Decisions can be traced back to facts. Outputs can be explained. Changes in behavior make sense because the surrounding context is visible.

The goal is not to mimic human intelligence, but to give AI enough grounding to avoid shallow interpretation.

In customer-facing industries, data is almost always fragmented. Transaction systems, CRM platforms, support tools, and digital channels each hold a partial view. When those signals are connected around a single customer entity, patterns begin to emerge that were invisible before.

AI can respond to behavior shifts instead of static segments. Churn prediction improves because it reflects relationships over time, not isolated actions. Offers adjust because life events and usage patterns are visible, not inferred.

In fraud and risk management, the value of context becomes even clearer. Fraud does not happen in isolation. It unfolds across accounts, devices, transactions, and time. When AI can reason across those connections, it stops treating every transaction as an independent event. False positives drop. Detection accelerates. The system understands the difference between coincidence and coordination.
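As a rough illustration of reasoning across connections, the sketch below groups accounts that are linked through any chain of shared devices. It uses a small union-find structure, which is one common way to find connected groups and is an assumption here rather than a method the article prescribes; the login data and identifiers are hypothetical.

```python
from collections import defaultdict

# Hypothetical (account, device) sightings; identifiers are illustrative only.
logins = [
    ("acct-1", "dev-A"),
    ("acct-2", "dev-A"),
    ("acct-2", "dev-B"),
    ("acct-3", "dev-B"),
    ("acct-4", "dev-C"),
]


def linked_account_groups(pairs):
    """Group accounts that are connected through any chain of shared devices."""
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving keeps trees shallow
            node = parent[node]
        return node

    def union(a, b):
        parent[find(a)] = find(b)

    # Accounts and devices share one node space, tagged by kind to avoid name clashes.
    for account, device in pairs:
        union(("account", account), ("device", device))

    groups = defaultdict(set)
    for kind, name in list(parent):
        if kind == "account":
            groups[find((kind, name))].add(name)
    return sorted(sorted(group) for group in groups.values())


groups = linked_account_groups(logins)
```

Here `acct-1` and `acct-3` never share a device directly, yet they land in the same group through `acct-2`. A transaction-by-transaction view would miss that link, while `acct-4` correctly stays on its own.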

The same logic applies to supply chains. Disruptions rarely come from a single failure. They propagate through dependencies. When suppliers, logistics partners, products, locations, and events are modeled as connected entities, AI can see how risk spreads and where intervention matters most. Decisions become proactive rather than reactive.
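A simple way to see how a disruption spreads through dependencies is a breadth-first walk over a dependency map. The sketch below assumes a hypothetical `feeds` mapping in which each key supplies or feeds the entities listed under it; the entity names and the `downstream_impact` helper are illustrative only.

```python
from collections import deque

# Hypothetical dependency edges: each key supplies or feeds the entities in its list.
feeds = {
    "supplier:resin-co": ["plant:east"],
    "plant:east": ["product:casing"],
    "product:casing": ["product:router", "product:modem"],
    "carrier:north": ["product:modem"],
}


def downstream_impact(start: str) -> dict[str, int]:
    """Breadth-first walk returning each affected entity and its hop distance."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in feeds.get(current, []):
            if nxt not in distance:
                distance[nxt] = distance[current] + 1
                queue.append(nxt)
    del distance[start]  # report only the entities downstream of the disruption
    return distance
```

Calling `downstream_impact("supplier:resin-co")` lists every plant and product that depends on that supplier, along with how many hops away it sits. Entities closer to the source are natural candidates for early intervention.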

Even everyday knowledge work improves. Search stops being about keywords and starts being about intent. Instead of returning documents, AI can answer questions that span systems and domains because the underlying data is already connected in a meaningful way.

AI readiness is often framed as a race to adopt new models. In practice, it is a discipline problem: keeping context attached to data as it moves between systems.

This is where 1touch.io fits naturally into the picture. Its focus is not on abstract AI capability, but on grounding data in reality. By discovering, classifying, and contextualizing data at the entity level, it builds a living map of how data actually exists and flows across the organization.

Data is not treated as isolated files or static tables. It is connected to identity, access, usage, and purpose. Context is explicit rather than assumed. Governance and privacy are preserved because meaning is visible, not reconstructed after the fact.

When context is clear, AI becomes safer to apply. Decisions are explainable because they are anchored in evidence. Insights scale because they are grounded in how the business actually operates.

AI does not need more confidence. It needs better understanding. And that starts with data that still knows what it represents.

Why Traditional DSPM Solutions Fall Short in an AI-Driven World

Data Security

All Industries

January 30, 2026

Why AI-Ready Data Starts With Context at the Entity Level

Data Security

January 22, 2026

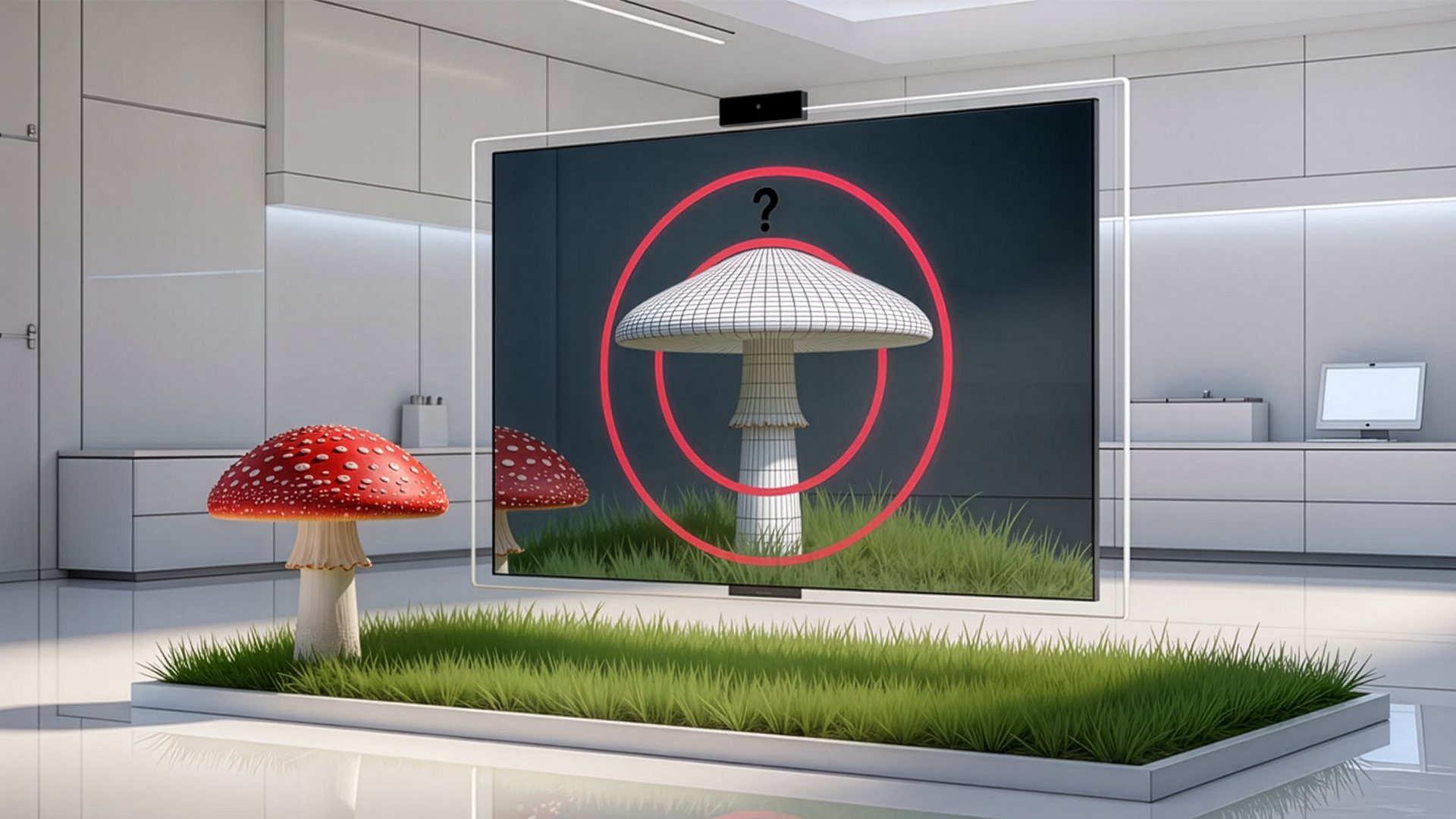

Context, Cognition, and the Poisoned Mushroom Problem: Why AI Readiness Depends on Data Understanding

Financial Services

January 16, 2026